Hadoop Projects for Students

The term Hadoop refers to an open-source framework for big data analysis based on distributed processing. It provides scalability, efficiency, and reliability in data processing. It runs on low-cost commodity servers and scales horizontally, which makes it easier to develop large-scale data applications.

This article introduces Hadoop projects for students and explains the relevant facts along the way!

Let's start with an overview of Hadoop for your better understanding.

What is Hadoop?

- Hadoop can store, process, and analyze massive datasets

- Hadoop is written mainly in Java and is open-source software from the Apache Software Foundation

- It has been widely used by social media platforms such as LinkedIn, Facebook, and Twitter, mainly for offline batch processing

Hadoop can run in three modes: fully distributed mode, pseudo-distributed mode, and standalone mode. They are described below.

- Fully Distributed Mode

  - Hadoop runs on a cluster of separate machines, and the master and slave (worker) nodes are defined in the configuration files

- Standalone Mode

  - Hadoop runs as a single Java process and uses the local file system instead of HDFS

- Pseudo-Distributed Mode

  - All Hadoop services run on a single node, which simulates a cluster on one machine
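The three modes differ mainly in a few configuration properties. The minimal Python sketch below shows them, assuming the values from the Apache single-node setup guide: standalone relies on the default `fs.defaultFS` of `file:///`, and pseudo-distributed mode uses `hdfs://localhost:9000` with `dfs.replication` set to 1. The fully distributed NameNode host name and port 9000 are illustrative assumptions, and the helpers `mode_properties` and `to_configuration_xml` are hypothetical.

```python
from xml.sax.saxutils import escape


def mode_properties(mode: str, namenode_host: str = "localhost") -> dict:
    """Return {filename: {property: value}} for one Hadoop run mode (illustrative)."""
    if mode == "standalone":
        # Local mode: no HDFS daemons; the default file system is the local disk.
        return {"core-site.xml": {"fs.defaultFS": "file:///"}}
    if mode == "pseudo-distributed":
        # Every daemon runs on one host, so only one replica per block is possible.
        return {
            "core-site.xml": {"fs.defaultFS": "hdfs://localhost:9000"},
            "hdfs-site.xml": {"dfs.replication": "1"},
        }
    if mode == "fully-distributed":
        # Daemons are spread over many hosts; keep the HDFS default of 3 replicas.
        # The NameNode host name and port 9000 are assumptions for this sketch.
        return {
            "core-site.xml": {"fs.defaultFS": f"hdfs://{namenode_host}:9000"},
            "hdfs-site.xml": {"dfs.replication": "3"},
        }
    raise ValueError(f"unknown mode: {mode}")


def to_configuration_xml(props: dict) -> str:
    """Render properties in the <configuration>/<property> layout of *-site.xml files."""
    lines = ["<configuration>"]
    for name, value in props.items():
        lines += [
            "  <property>",
            f"    <name>{escape(name)}</name>",
            f"    <value>{escape(value)}</value>",
            "  </property>",
        ]
    lines.append("</configuration>")
    return "\n".join(lines)


if __name__ == "__main__":
    for filename, props in mode_properties("pseudo-distributed").items():
        print(f"--- {filename}")
        print(to_configuration_xml(props))
```

Keeping the per-mode values in one function makes it easy to see that switching modes mostly means changing the default file system and the replication factor.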

The points above cover the essentials of Hadoop and its run modes, which form the basis for developing Hadoop projects for students. Our researchers prepared this article with special care so that students can follow it easily, and we hope it helps anyone who needs it.

Hadoop consists of several important components and subcomponents that benefit industry and practitioners in every technology field. Let's move on to the next phase: the components of Hadoop.

What are the Main Components of Hadoop?

- YARN

- Hadoop MapReduce

- HDFS
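To show how the MapReduce component processes data, here is a single-process Python simulation of the classic word count job. It is a sketch of the map, shuffle, and reduce phases only, not the Hadoop Java API; in a real cluster, YARN runs mappers and reducers as separate tasks over data stored in HDFS.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: sum the partial counts for one word."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(mapped)
    # Hadoop hands keys to each reducer in sorted order; sorted() mimics that here.
    return dict(reduce_phase(key, values) for key, values in sorted(grouped.items()))


if __name__ == "__main__":
    print(word_count(["Hadoop stores data in HDFS", "YARN schedules Hadoop jobs"]))
```

Because each mapper only looks at its own line and each reducer only at its own key, both phases can run in parallel across many nodes.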

Apart from the main components, the following ecosystem tools also play an important role in Hadoop applications.

- Analytics & Machine Learning Tools

  - Apache Drill

  - Apache Mahout

- Serialization Tools

  - Avro

  - Thrift

- Monitoring & Management Tools

  - ZooKeeper

  - Ambari

  - Oozie

- Data Integration Tools

  - Chukwa

  - Apache Flume

  - Sqoop

- Storage Tools

  - HBase

- Data Access Tools

  - Hive

  - Pig

These are the main components and subcomponents of Hadoop. Next, our experts explain the principles behind Hadoop technology. Are you interested in moving on? We know you are curious! Here we go.

Principles of Hadoop

- Data Security & Reliability

  - HDFS replicates each data block across several nodes (three copies by default), so hardware failures do not lead to data loss

- Accessible Interface

  - It offers simple programming and user interfaces that support a structured workflow

- Code to Data Transmission

  - Moving large datasets across the network is slow and costly, so Hadoop ships the code to the nodes where the data is stored (data locality), and storage and preprocessing happen locally

- Scalability

  - More machines can be added to the cluster to increase capacity, which is known as scaling out (horizontal scaling)

- Fault Tolerance

  - When a node fails, Hadoop recovers by re-running its tasks on other nodes and re-replicating its data blocks from the remaining copies
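The reliability and fault tolerance principles rest on block replication. The Python sketch below splits a file into 128 MB blocks (the HDFS default block size in Hadoop 2.x and later) and places three replicas of each block (the default `dfs.replication`). The round-robin placement is a simplifying assumption; real HDFS uses a rack-aware placement policy. All function names are hypothetical.

```python
import math
from typing import Dict, List, Set

BLOCK_SIZE_MB = 128  # HDFS default block size (dfs.blocksize) in Hadoop 2.x and later
REPLICATION = 3      # HDFS default replication factor (dfs.replication)


def count_blocks(file_size_mb: float, block_size_mb: int = BLOCK_SIZE_MB) -> int:
    """Number of blocks a file occupies; the last block may be only partly full."""
    return math.ceil(file_size_mb / block_size_mb)


def place_replicas(num_blocks: int, nodes: List[str],
                   replication: int = REPLICATION) -> Dict[int, List[str]]:
    """Put each block on `replication` distinct nodes, round-robin (simplified policy)."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    return {
        block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def all_blocks_readable(placement: Dict[int, List[str]], failed: Set[str]) -> bool:
    """True if every block still has at least one replica on a live node."""
    return all(any(node not in failed for node in replicas)
               for replicas in placement.values())


def under_replicated(placement: Dict[int, List[str]], failed: Set[str]) -> List[int]:
    """Blocks that lost a replica and would need re-replication to restore the target."""
    return [block for block, replicas in placement.items()
            if any(node in failed for node in replicas)]


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3", "node4"]
    placement = place_replicas(count_blocks(300), nodes)
    print(placement)
    print(all_blocks_readable(placement, {"node2"}), under_replicated(placement, {"node2"}))
```

With three replicas on distinct nodes, any two nodes can fail and every block stays readable; the under-replicated list is what the cluster would copy again to restore three replicas.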

These are the major principles of Hadoop technology. Hadoop can handle many problems that arise in the real world, and our researchers have listed some of them below for ease of understanding. Shall we get into them? Let's take some handy notes.

Big Data Problems Supported by Hadoop

- Text Analysis

  - Scam / Spam Filtering

  - Search Engine Optimization

  - Query Formulation / Information Retrieval

- Clickstream Analytics

  - User Behavior Analysis

  - Customer Behavior Analysis

  - Log Patterns

  - Posts and Reports

- Risk Correlation

  - Risk Modeling

  - Detecting Suspicious Activities

- Recommender Systems

  - Recommending Products and Movies

  - Smart Filtering Options

Learning a technology in depth can seem difficult, but experiments and research will help you get the best results when implementing Hadoop projects for students. Real-time projects expose you to every aspect of the technology, so the next section presents some real-time applications.

Hadoop Real-Time Applications

- Analysis of Emotions

  - Hadoop applications can analyze data streamed in from Apache Kafka

  - The process identifies text phrases and groups them according to the emotions they express

- Location Privacy Control in Vehicles

  - The location of a vehicle can be tracked using Apache Flink and MapReduce

  - It can also help predict a vehicle's upcoming positions
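The emotion analysis step can be sketched as splitting text into phrases and labeling each phrase. In this minimal Python illustration, a plain list of strings stands in for messages consumed from Kafka, and the tiny emotion lexicon is hypothetical; a production system would use a trained model or a curated lexicon.

```python
import re
from typing import Dict, List

# Hypothetical, tiny emotion lexicon used only for this illustration.
EMOTION_LEXICON = {
    "joy": {"happy", "great", "love", "excellent"},
    "anger": {"angry", "hate", "terrible", "furious"},
    "sadness": {"sad", "disappointed", "unhappy"},
}


def split_phrases(text: str) -> List[str]:
    """Treat sentence punctuation, commas, and semicolons as phrase boundaries."""
    return [p.strip() for p in re.split(r"[.!?,;]+", text) if p.strip()]


def label_phrase(phrase: str) -> str:
    """Pick the emotion whose lexicon shares the most words with the phrase."""
    words = set(re.findall(r"[a-z']+", phrase.lower()))
    scores = {emotion: len(words & vocab) for emotion, vocab in EMOTION_LEXICON.items()}
    best = max(scores, key=scores.get)  # ties go to the emotion listed first
    return best if scores[best] > 0 else "neutral"


def segment_by_emotion(messages: List[str]) -> Dict[str, List[str]]:
    """Group phrases from all messages by their emotion label."""
    segments: Dict[str, List[str]] = {}
    for message in messages:  # stand-in for records consumed from a Kafka topic
        for phrase in split_phrases(message):
            segments.setdefault(label_phrase(phrase), []).append(phrase)
    return segments


if __name__ == "__main__":
    print(segment_by_emotion(["I love this phone. The battery is terrible, the screen is fine"]))
```

In a Hadoop job, `label_phrase` would play the role of the map step and the grouping in `segment_by_emotion` the role of the reduce step.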

These are some of the real-time applications that can be built as Hadoop projects for students, and many more exist. If you still need any clarification or explanation, you can contact us at any time. Our researchers are always available to assist you with emerging technology concepts.

Our researchers keep their skills current with technical updates, so they know the innovations and requirements of the technology. As a company of experts serving clients all over the world, we understand the industry's expectations. The following list presents innovative Hadoop project ideas for students.

Hadoop Project Ideas

- Hadoop Log Analysis

- Security and Privacy in Big Data, Cloud & IoT

- Social Media Privacy Policies

- Cloud Data Analysis

- Streaming Analysis with Apache Flink & Apache Spark Streaming

- Social Media Monitoring

- Location Surveillance in Social Media

- Data Processing for YouTube

- Image Retrieval with Hadoop

- Cyber Security Preventive Measures

- Parallel Processing & Distributed Databases

- Data Propagation and Attribute Privacy

Tools and techniques play a vital role in every process. You might wonder which tools are used in Hadoop applications, and our experts answer that question in this section. Many tools can work with Hadoop clusters; for instance, MATLAB and R can run on Hadoop YARN. Shall we get into it? Here we go!

Hadoop Tools for Big Data

- MapRejuice

  - A Node.js-based distributed MapReduce tool that runs computations on client machines

- HaLoop

  - An extension of Hadoop for iterative applications

- CouchDB

  - A database whose views are defined with MapReduce functions written in JavaScript

- Mincemeat.py

  - A MapReduce framework written in Python

- GridGain

  - An open-source tool with MapReduce support

- MARS

  - A MapReduce framework for NVIDIA graphics processors (GPUs)

- MongoDB

  - A document database that provides a MapReduce operation for data aggregation

These are common tools used with MapReduce-based applications. Every application must also be secure and reliable, so Hadoop has dedicated security tools. Since you may want more detail here, our researchers compare the main Hadoop security tools in the next section.

Comparison of Hadoop based Security Tools

Apache Ranger, Apache Sentry, and Apache Knox Gateway are compared below on four functions: authentication, authorization, auditing, and data protection.

- Apache Ranger

  - Authentication: centralized security administration

  - Authorization: attribute- and role-based control with fine-grained and standard authorization

  - Auditing: centralized (integrated) auditing

  - Data protection: wire encryption

- Apache Sentry

  - Authentication: integrated with Apache Knox

  - Authorization: role-based and fine-grained authorization

  - Auditing: not supported

  - Data protection: not supported

- Apache Knox Gateway

  - Authentication: Kerberos encapsulation and a single access point

  - Authorization: service-level authorization

  - Auditing: service-level auditing

  - Data protection: not supported
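Role-based, fine-grained authorization means that a role is granted specific actions on specific columns, and everything else is denied. The plain Python sketch below models that idea. The `Policy` class and `is_authorized` function are hypothetical and are not the Ranger or Sentry API; they only illustrate what a policy check decides.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set


@dataclass(frozen=True)
class Policy:
    """Hypothetical grant: a role may perform `actions` on some columns of one table."""
    role: str
    database: str
    table: str
    columns: FrozenSet[str]  # "*" grants every column
    actions: FrozenSet[str]  # e.g. {"select"}


def is_authorized(user_roles: Set[str], policies: Iterable[Policy],
                  database: str, table: str, column: str, action: str) -> bool:
    """Allow the request only if some policy for one of the user's roles covers it."""
    return any(
        p.role in user_roles
        and p.database == database
        and p.table == table
        and (column in p.columns or "*" in p.columns)
        and action in p.actions
        for p in policies
    )


if __name__ == "__main__":
    policies = [Policy("analyst", "sales", "orders",
                       frozenset({"order_id", "amount"}), frozenset({"select"}))]
    print(is_authorized({"analyst"}, policies, "sales", "orders", "amount", "select"))
    print(is_authorized({"analyst"}, policies, "sales", "orders", "card_number", "select"))
```

Denying by default (when no policy matches) is the usual design choice for authorization checks, because a missing grant then fails safely.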

The comparison above summarizes the main Hadoop security tools and their functions. We hope you are getting the point; this article is written simply so that students can gain a focused understanding of Hadoop. Built-in parameters help administrators overcome application configuration barriers, so our experts next describe an experimental setup for Hadoop applications.

Experimental Setup for Hadoop Projects for Students

- Each network switch connects four nodes running the DataNode and TaskTracker over 1 gigabit per second (Gbps) links

- The switches connect to the master node running the JobTracker over 10 Gbps links

- The scheduler acts as the controller of the Hadoop setup, so it collects information about where data packets originate and where the corresponding data is stored

- The scheduler also retrieves device details and estimates process timing

- TaskTrackers report CPU, configuration parameters, and network/IO properties

- The JobTracker records the device, the job, and the execution duration of its tasks
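The scheduler's use of data locations can be illustrated with a greedy, locality-aware assignment: each task goes to a free node that already holds its input block and falls back to a remote read only when no such node is free. This Python sketch is a simplification with hypothetical function names; it ignores rack locality and the delay scheduling used by real Hadoop schedulers, and the remote-read cost assumes the 1 Gbps per-node link speed from the setup above, decimal megabytes, and no protocol overhead.

```python
from typing import Dict, List, Set, Tuple

NODE_LINK_GBPS = 1.0  # per-node link speed assumed from the setup above


def remote_read_seconds(size_mb: float, link_gbps: float = NODE_LINK_GBPS) -> float:
    """Approximate time to pull a block over the network (decimal MB, no overhead)."""
    megabits = size_mb * 8
    return megabits / (link_gbps * 1000)


def assign_tasks(block_locations: Dict[str, Set[str]],
                 free_nodes: List[str]) -> Dict[str, Tuple[str, bool]]:
    """Greedy locality-aware assignment: returns {task: (node, is_data_local)}."""
    available = list(free_nodes)
    assignments: Dict[str, Tuple[str, bool]] = {}
    for task, hosts in block_locations.items():
        if not available:
            break  # remaining tasks wait for the next free slot
        local = [node for node in available if node in hosts]
        node = local[0] if local else available[0]
        available.remove(node)
        assignments[task] = (node, bool(local))
    return assignments


if __name__ == "__main__":
    locations = {"map-0": {"node2", "node3"}, "map-1": {"node1"}, "map-2": {"node9"}}
    print(assign_tasks(locations, ["node1", "node2", "node3"]))
    print(f"{remote_read_seconds(128):.3f} s to fetch a 128 MB block remotely")
```

Every data-local assignment saves roughly one second of network transfer per 128 MB block at 1 Gbps, which is why schedulers track block locations.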

These are the factors of an experimental Hadoop setup. You also need to know how Hadoop is configured, because configuration is essential for implementing a Hadoop application on your own. The important configuration elements are listed below for your better understanding.

Hadoop Configurations

- Workload

  - Micro-benchmarks: TeraSort and WordCount

- Software

  - Spark: version 2.1.0

  - Hadoop: version 2.4.0

  - JDK: version 1.7.0

  - OS: Ubuntu 16.04.2 with Linux kernel 4.13.0

- Node Configuration

  - Node count: 10

  - CPU cores: 80 (10 × 8)

  - Built-in storage: 60 (6 × 10)

  - RAM: 32 GB

  - Processor: Intel(R) Xeon(R) E3-1231 v3 @ 3.40 GHz

- Server Configuration

  - RAM: 64 GB

  - Built-in storage: 10 terabytes (TB)

  - Processor: 2.9 GHz

These are the configuration details of a Hadoop deployment, covering the workload, software, nodes, and servers. In addition, you should learn about the configuration files and the categories of configuration parameters. Shall we take a brief look at them in the next section?

What are the Different Hadoop Configuration Files?

- masters and slaves

- hdfs-site.xml

- yarn-site.xml

- core-site.xml

- mapred-site.xml

- hadoop-env.sh
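The `*-site.xml` files share one layout: a `<configuration>` root containing `<property>` elements with `<name>` and `<value>` children. The Python sketch below reads that layout with the standard `xml.etree.ElementTree` module, along with a one-host-per-line file such as `slaves` (renamed `workers` in Hadoop 3). The helper names are hypothetical, and ignoring blank lines and `#` comments in the hosts file is an assumption of this sketch.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def read_site_xml(xml_text: str) -> Dict[str, str]:
    """Parse the content of a *-site.xml file into {property name: value}."""
    root = ET.fromstring(xml_text)
    props: Dict[str, str] = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name is None:
            continue  # skip malformed entries without a <name>
        props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def read_hosts_file(text: str) -> List[str]:
    """Read a slaves/workers-style file: one host per line, ignoring blanks and # comments."""
    hosts = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            hosts.append(line)
    return hosts


if __name__ == "__main__":
    hdfs_site = """<configuration>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
</configuration>"""
    print(read_site_xml(hdfs_site))
    print(read_hosts_file("worker1\nworker2  # rack A\n\n# spare\nworker3\n"))
```

Reading the files into plain dictionaries makes it easy to compare the settings of two clusters or to check a value before starting the daemons.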

These files contain important parameters that determine how effectively Hadoop is configured. Some of the key MapReduce parameters are explained below.

MapReduce Tuning Parameters

- Shuffle

  - mapreduce.task.io.sort.factor: tuned from 15 to 60

  - mapreduce.reduce.shuffle.parallelcopies: tuned from 50 to 500

  - io.sort.factor: tuned from 25 to 100

  - io.sort.mb: tuned from 512 to 2047

- Input Split

  - mapred.min.split.size and mapred.max.split.size: 256, 512, and 1024 MB, compared with the default of 128 MB

- mapreduce.reduce.cpu.vcores and reduce memory: 4 vcores and 8 GB

- mapred.reduce.task: 25600 and 16384 MB
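The split-size parameters interact with the HDFS block size. The Python sketch below mirrors the rule used by `FileInputFormat` in the newer MapReduce API, where the split size is `max(minSize, min(maxSize, blockSize))` and the final split may be up to 10% larger than the others (the `SPLIT_SLOP` factor of 1.1). It is an illustrative re-implementation with hypothetical function names, not Hadoop code.

```python
from typing import List, Tuple

MB = 1024 * 1024
SPLIT_SLOP = 1.1       # the last split may be up to 10% larger than split_size
LONG_MAX = 2**63 - 1   # Java Long.MAX_VALUE, the default maximum split size


def compute_split_size(block_size: int, min_size: int, max_size: int) -> int:
    """Split size = max(minSize, min(maxSize, blockSize))."""
    return max(min_size, min(max_size, block_size))


def plan_splits(file_length: int, block_size: int = 128 * MB,
                min_size: int = 1, max_size: int = LONG_MAX) -> List[Tuple[int, int]]:
    """Return (offset, length) pairs for the input splits of one file."""
    split_size = compute_split_size(block_size, min_size, max_size)
    splits = []
    remaining = file_length
    while remaining / split_size > SPLIT_SLOP:
        splits.append((file_length - remaining, split_size))
        remaining -= split_size
    if remaining > 0:
        splits.append((file_length - remaining, remaining))
    return splits


if __name__ == "__main__":
    for min_mb in (1, 256, 512, 1024):
        splits = plan_splits(3000 * MB, min_size=min_mb * MB)
        print(f"min split {min_mb} MB -> {len(splits)} map tasks")
```

Raising the minimum split size above the 128 MB block size produces fewer, larger splits and therefore fewer map tasks, which is the trade-off that tuning these parameters explores.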

So far, we have covered the important aspects of Hadoop applications. Next come the comparison metrics, which give a better understanding of how Hadoop projects for students are evaluated.

Hadoop Comparison Metrics

- Task Features

  - This parameter ensures that local devices are used fully by allotting tasks properly to each pool

- Resource Pool Configuration

  - This parameter describes the resource types, which may be duplicated across pools

  - The resource pool configuration indicates which resources are available and what they are used for

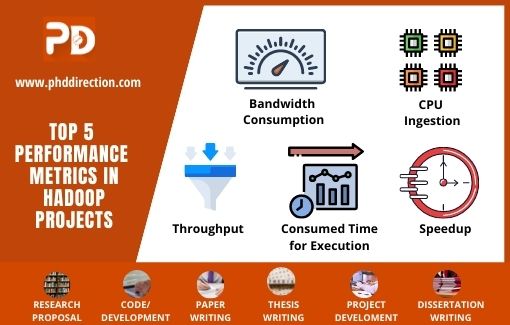

The performance of Hadoop applications is usually evaluated with the following five metrics.

- Bandwidth Consumption

- CPU Utilization

- Throughput

- Execution Time

- Speedup
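Each of the five metrics reduces to a simple ratio. The Python sketch below shows one common way to compute them; the function names, units, and sample values are illustrative assumptions, not the measurements behind this article.

```python
def execution_time(start_s: float, end_s: float) -> float:
    """Wall-clock time a job (or batch of jobs) took, in seconds."""
    return end_s - start_s


def throughput(jobs_completed: int, elapsed_s: float) -> float:
    """Jobs completed per second."""
    return jobs_completed / elapsed_s


def speedup(baseline_s: float, improved_s: float) -> float:
    """How many times faster the improved run is than the baseline (>1 means faster)."""
    return baseline_s / improved_s


def cpu_utilization(busy_core_seconds: float, cores: int, elapsed_s: float) -> float:
    """Fraction of the available core time that was actually used (0.0 to 1.0)."""
    return busy_core_seconds / (cores * elapsed_s)


def bandwidth_mbps(bytes_transferred: int, elapsed_s: float) -> float:
    """Average network bandwidth consumed, in megabits per second."""
    return bytes_transferred * 8 / 1_000_000 / elapsed_s


if __name__ == "__main__":
    # Made-up sample numbers, for illustration only.
    t_one_node = execution_time(0.0, 1200.0)
    t_ten_nodes = execution_time(0.0, 200.0)
    print("speedup:", speedup(t_one_node, t_ten_nodes))
    print("throughput (jobs/s):", throughput(40, t_ten_nodes))
    print("CPU utilization:", cpu_utilization(12000.0, 80, t_ten_nodes))
    print("bandwidth (Mbps):", bandwidth_mbps(25_000_000_000, t_ten_nodes))
```

Measuring these values while varying the number of jobs and nodes shows how well a configuration scales.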

These performance metrics are evaluated against the number of jobs and the number of nodes. We hope this article has covered the topic well and met your expectations. If you need any assistance with Hadoop projects, you can rely on our suggestions, because doing Hadoop projects for students is our passion.

Let's make your ideas stand out from others with our innovations!

